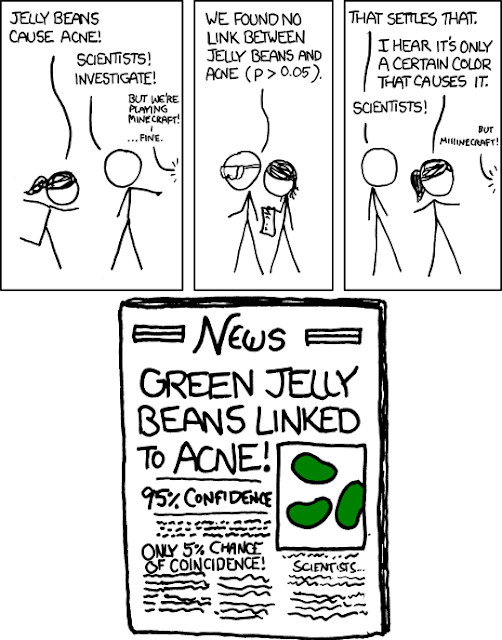

As an undergraduate, I rarely thought to scrutinize the data presented in scientific papers. I always assumed the published data was honest and accurate; in my mind, it had to be in order to get published. It wasn’t until I started graduate school and became involved in various journal clubs that I learned to analyze data more critically. I began to ask not only whether the data supported the conclusions being drawn, but also how the results were generated. As I became more aware of this, I began to notice that some of the grand conclusions in various papers were not fully supported by the published results. Going a step further, I also began to notice how scientists around me would manipulate conditions and data to better support their hypotheses.

I fully agree with the point made in the article “Why you can’t always believe what you read in scientific journals” about the pressure to publish in high-impact journals, since such publications are treated as the measure of scientific success. In my opinion, this pressure can compromise not only the quality of the work but also its integrity. Pressure on the PI becomes pressure on the scientists in the lab, and this stress can even create an atmosphere of fear around presenting data that does not fit the proposed theories. Another point, made in Scientific American, concerned reproducibility: in many cases, published research cannot be reproduced. The article also noted that this irreproducibility is swept under the rug out of fear of public opinion and of losing funding opportunities. I feel that if less emphasis were placed on “perfect research” (research that confirms the proposed theories), scientists might feel freer to perform genuinely unbiased research.

While peer review can help combat unreliable research, I agree with implementing additional measures, such as the 18-point checklist adopted by Nature and its sister publications and the post-publication review site PubPeer, to help reverse this apparent decline in the integrity of scientific research. This secondary layer of accountability may help scientists design better experiments and self-correct so as not to jeopardize their credibility.